Here is everything you need to know about the Rotobot plugin for Nuke.

We interviewed Sam Hodge, founder of Kognat – Deep Learning Tools for Visual Effects. Sam has developed Rotobot, an OpenFX plugin for Nuke built with deep learning. In deep learning, machines learn to replicate tasks performed by humans, using neural network algorithms trained on examples. With these AI (Artificial Intelligence) based neural networks, machines can learn, adapt and carry out work that people currently do by hand. Such systems can dramatically increase the productivity of a post-production pipeline.

Read Sam’s detailed interview below.

I have spent my life following trends in the things that interest me. Initially that led me to recombinant DNA manipulation, then to computer graphics for Hollywood feature films. Artificial Intelligence as a concept has fascinated me since the late 80s. Now that the frameworks are easy enough to use for anyone with a bit of computer science background, I wanted to make these tools available to anybody who can run an installer and use a GUI. Rotoscoping seemed the ideal task to automate using AI.

Welcome, Sam. Please tell us about your academic and professional credentials.

My qualifications are varied. Straight out of high school I completed an Honours Degree in Molecular Biology, during which I worked as a genetic engineer on barley enzymes used in beer making, as well as researching growth factor gene expression in the gut in a Children’s Hospital environment. During this time I had access to high-end workstations for visualizing molecular structures. This led me to realize that I should never have dropped art in high school, so I compiled ray tracing software and ran it on the mainframes I had access to around the labs.

I was passionate about telling stories with computers, so I retrained in interactive multimedia and computer graphics. When I graduated, the dot-com boom was in full swing, so I worked for a startup making a humanoid with detailed muscle simulations for the fitness and health industries. Other jobs in digital media followed until I moved into Hollywood film in 2003. Since then I have worked on about 30 feature films, including two Visual Effects Oscar winners from three nominations. My roles started with character setup, followed by lighting, a stint as CG Supervisor, then asset supervision and pipeline software development.

From 2013 to 2017, while working full-time, I completed a postgraduate diploma in computer science; the department where I studied has a very strong Artificial Intelligence and Machine Learning group with a background in Computer Vision. Although I have written code since an early age, I was always self-taught, and I wanted to learn the details of how software is created. On completing my studies, I started Kognat as a side business while continuing to work full-time at Rising Sun Pictures (RSP).

Please explain the Rotobot Nuke plugin in detail.

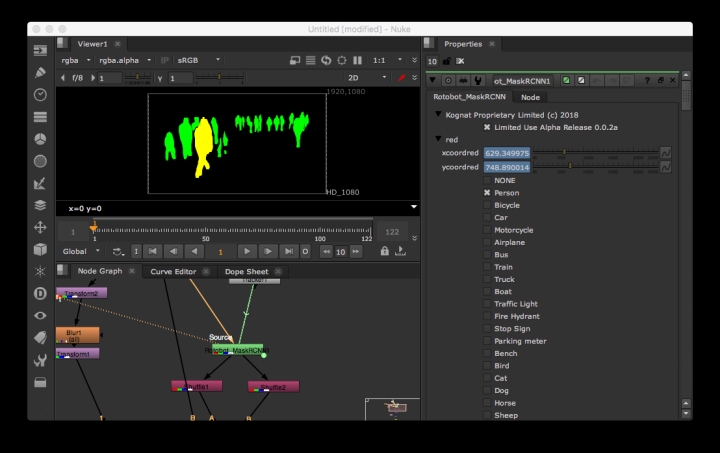

Rotobot uses computer vision algorithms to detect which pixels belong to a semantic class. You ask the knowledge base, “Which pixels belong to the class ‘person’?”, and it responds by labelling the pixels that are person. There are actually two nodes. Instance segmentation can isolate each person in a crowd from the others, while segmentation labels all the pixels belonging to all the people in a crowd and puts them into one labelled group. Person is not the only class available: there are 20 classes in segmentation and 80 in instance segmentation.
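The difference between the two nodes can be illustrated with a toy example. This is not Rotobot’s code; it assumes a hypothetical per-pixel instance-ID map and an ID-to-class lookup (`INSTANCE_IDS`, `INSTANCE_CLASS`), and shows how a semantic mask merges every person into one group while an instance mask isolates a single person.

```python
# Hypothetical 4x6 instance-ID map: 0 is background, 1 and 2 are two
# different people, 3 is a car. A real map would come from the network.
INSTANCE_IDS = [
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
    [3, 3, 3, 3, 0, 0],
]
# Hypothetical lookup from instance ID to semantic class name.
INSTANCE_CLASS = {1: "person", 2: "person", 3: "car"}


def semantic_mask(instance_ids, instance_class, wanted):
    """1 wherever the pixel belongs to ANY instance of the wanted class."""
    return [[1 if instance_class.get(i) == wanted else 0 for i in row]
            for row in instance_ids]


def instance_mask(instance_ids, wanted_id):
    """1 only where the pixel belongs to one specific instance."""
    return [[1 if i == wanted_id else 0 for i in row] for row in instance_ids]


people = semantic_mask(INSTANCE_IDS, INSTANCE_CLASS, "person")
second_person = instance_mask(INSTANCE_IDS, 2)
print(sum(map(sum, people)), sum(map(sum, second_person)))  # 12 6
```

The semantic mask covers all 12 “person” pixels as one group, while the instance mask keeps only the 6 pixels of the second person.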

The user interface is pretty simple: you choose which classes, or which instances of a class (picked via a pixel coordinate), should go into the red, green and blue channels respectively. You can then use these single-channel masks to create effects. The algorithms used are deep neural network models trained on many images with ground-truth labels; training minimises the error, or loss, between the ground truth and the model’s prediction. Training runs on GPUs for days at a time, and what is learned is captured in the model that is delivered with the Rotobot software. Further training will continue at Kognat.
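Assuming the label maps already exist, packing a choice of classes, or an instance picked by pixel coordinate, into the R, G and B channels might look like the sketch below. `CLASS_MAP`, `INSTANCE_MAP` and the helper functions are hypothetical illustrations, not Rotobot’s actual knobs, and row 0 is treated as the top of the frame here.

```python
# Hypothetical per-pixel class labels and instance IDs for a 3x4 frame.
CLASS_MAP = [
    ["sky", "sky", "sky", "sky"],
    ["person", "person", "dog", "sky"],
    ["person", "person", "dog", "road"],
]
INSTANCE_MAP = [
    [0, 0, 0, 0],
    [1, 1, 2, 0],
    [1, 1, 2, 0],
]


def class_channel(class_map, name):
    """Single-channel mask: 1.0 where the pixel has the chosen class."""
    return [[1.0 if c == name else 0.0 for c in row] for row in class_map]


def instance_channel_at(instance_map, x, y):
    """Pick the instance under pixel (x, y) and return its mask."""
    picked = instance_map[y][x]
    if picked == 0:  # background under the cursor: nothing to isolate
        return [[0.0] * len(row) for row in instance_map]
    return [[1.0 if i == picked else 0.0 for i in row] for row in instance_map]


def pack_rgb(red, green, blue):
    """Combine three single-channel masks into per-pixel (r, g, b) tuples."""
    return [list(zip(r, g, b)) for r, g, b in zip(red, green, blue)]


rgb = pack_rgb(
    class_channel(CLASS_MAP, "person"),        # red: every person
    instance_channel_at(INSTANCE_MAP, 2, 1),   # green: instance under x=2, y=1
    class_channel(CLASS_MAP, "sky"),           # blue: all sky pixels
)
print(rgb[1])
```

Keeping each mask in its own channel means a compositor can pull any one of them out later as a matte.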

Are you the sole founder and owner of ‘Kognat Proprietary Limited’, or is it a partnership? What does ‘Kognat’ mean?

Kognat is a company limited by shares, with two equal shareholders, but I am the sole founder. I am coming to realise that as the company grows, we will need to delegate more tasks to allow faster growth and to try out other ideas and improvements. There is a lot of talent in the AI and graphics community in Adelaide, and the startup scene is well established, so finding the right people should be straightforward.

Kognat is a nod to the word cognitive, relating to thinking and thereby to machine learning. The cog in the logo puts the machine into machine learning. It was also a six-letter name whose .com and .com.au addresses had yet to be registered.

Please explain to our readers the concept of ‘semantic segmentation’.

Semantic segmentation is the process of training a deep neural network model to learn the relationship between an input image and a labelled ground truth that assigns each pixel to a single class. Once the model is trained, you can give it a new input image and it can predict, with a degree of accuracy, which pixels belong to each class. In practice, you give the model an image and ask “Where are the people?”, and the model responds by labelling the pixels containing people with a coloured mask.
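To make “predict which pixels belong to a class” and “minimise the loss” concrete: a segmentation network typically outputs one score per class for every pixel, the prediction is the highest-scoring class, and training commonly minimises per-pixel cross-entropy against the ground truth. The sketch below uses made-up scores and a hypothetical three-class list; cross-entropy is a common choice, not necessarily the loss used to train Rotobot.

```python
import math

CLASSES = ["background", "person", "car"]  # hypothetical class list


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_labels(score_map):
    """Return the most probable class index for each pixel."""
    return [[px.index(max(px)) for px in row] for row in score_map]


def pixel_cross_entropy(score_map, truth):
    """Mean of -log p(true class) over all pixels: the loss training minimises."""
    losses = [-math.log(softmax(px)[t])
              for row, trow in zip(score_map, truth)
              for px, t in zip(row, trow)]
    return sum(losses) / len(losses)


# Hypothetical raw network scores for a 1x3 image (one score per class).
scores = [[[2.0, 0.5, 0.1], [0.2, 3.0, 0.3], [0.1, 0.4, 2.5]]]
truth = [[0, 1, 2]]  # ground-truth class index per pixel

labels = predict_labels(scores)
print([[CLASSES[i] for i in row] for row in labels])
print(pixel_cross_entropy(scores, truth))
```

Training nudges the network so the true class gets a higher probability at every pixel, which drives this loss down.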

What drove you to write the AI-based Rotobot plugin?

When I was working as a lighting technical director creating an environment extension to sit behind characters in the foreground, I was unable to present my work until a mask of the people in the foreground had been created. There were two ways to do this: I could trace around the edges of the people myself, or wait for someone to do it for me. Either way, I couldn’t present my work until that mask existed. When I saw a video of semantic segmentation on the web on the 11th of November 2017, I thought, “Wow, that solves the problem I had when working as a lighting technical director.” For a preview, the quality of the mask matters less than the effort it takes to produce it, and having a machine compute the result takes very little effort indeed.

What kind of R&D did you go through while developing the Rotobot Nuke plugin? Please share your experiences with early test shots.

Prototypes were built on fairly hard-coded frameworks that allowed the input material to be passed in from the command line. Next came housing the framework inside the compositing application, for which a number of neural network frameworks and software stacks were explored. I kept using the same input footage, holiday videos from my travels over the years; using footage that I own the copyright to allows me to publish the work and to test again with different creative effects.

What were the biggest technical challenges, and how did you overcome them?

Getting the various software components to build on three operating systems proved difficult. Training the model for increased accuracy took patience, but I knew that if I persisted the results would get better; that is what the optimisation process of deep learning is all about. Increasing the resolution of the model means you can’t fit the entire model on the GPU during training, so you need to split the model across the GPU and CPU.
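A quick back-of-the-envelope calculation shows why resolution hurts: the memory for a single feature map grows with height × width, so doubling the resolution quadruples it, and training must keep many such maps around for back-propagation. The figures below are for a hypothetical 64-channel float32 layer, not Rotobot’s actual architecture.

```python
def activation_bytes(channels, height, width, batch=1, bytes_per_value=4):
    """Memory for one feature map of shape (batch, channels, height, width).

    bytes_per_value=4 assumes 32-bit floats.
    """
    return batch * channels * height * width * bytes_per_value


# Hypothetical layer with 64 feature channels, evaluated at two input sizes.
for h, w in [(512, 512), (1024, 1024)]:
    mib = activation_bytes(64, h, w) / 2**20
    print(f"{w}x{h}: {mib:.0f} MiB for this one feature map")
```

This prints 64 MiB at 512x512 and 256 MiB at 1024x1024 for a single layer; multiplied across the dozens of layers in a deep network, it is easy to exceed GPU memory.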

Why only Foundry Nuke as the platform?

That isn’t the case: any OpenFX-compliant package can run Rotobot. I have tested it with Nuke, Natron, Scratch and Resolve Fusion. There is also a command-line OpenFX host called TuttleOFX, which I want to test for larger batch operations, such as processing every piece of footage at turnover. I have been in contact with another developer who wants to port it to After Effects.

Please share your detailed thoughts on the current state and future of deep learning and machine learning.

Much as CPU computing power and RAM have grown from the 1990s until now, we will see similar trends in the machinery that runs deep learning: tensor computation.

This typically runs on the GPU, but we may see other co-processors dedicated to AI, for either training or inference (a fancy way of saying using the trained knowledge). If there is a problem with a pattern that can be labelled by hand, chances are you can create a tensor-based model that will predict that pattern. It’s just a matter of producing labelled data that frames the question in a way a neural network can learn.
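The idea of “labelled data in, predictive model out” can be shown at the smallest possible scale. The sketch below fits a single-input logistic model to a handful of hypothetical hand-labelled pixel brightnesses using gradient descent on the log-loss; it is a toy stand-in for a deep network, chosen so the whole optimisation loop fits in a few lines.

```python
import math

# Hypothetical hand-labelled data: (pixel brightness, 1 if "foreground").
samples = [(0.05, 0), (0.15, 0), (0.30, 0), (0.70, 1), (0.85, 1), (0.95, 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, steps=5000, lr=1.0):
    """Fit p(label=1 | x) = sigmoid(w*x + b) by gradient descent on log-loss."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # d(log-loss)/d(logit) for one sample
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b


w, b = train(samples)


def predict(x):
    """Classify a new brightness value with the learned parameters."""
    return int(sigmoid(w * x + b) > 0.5)


print([predict(x) for x, _ in samples], predict(0.2), predict(0.8))
```

A real segmentation network has millions of parameters instead of two, but the loop is the same in spirit: compare predictions with labels, compute the gradient of the loss, and step the parameters downhill.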

How is the Rotobot Nuke plugin different from After Effects’ Roto Brush, Mocha Pro’s planar tracking, Flobox’s rotoDRAW and other competing roto and paint software?

The best part of the Rotobot plugin is that it needs very little human-computer interaction. Apart from choosing a class, a pixel coordinate and a mask colour, you just set it computing. That can be both a good and a bad thing: without supervision, if things go well you are all done, but until we get spline-based results, touching up the results becomes a paint process.

Can we expect more groundbreaking tools for Visual Effects from the house of Kognat?

One thing I was initially interested in was Style Transfer, like the Prisma app on mobile, but at full resolution and with a marketplace for different looks. There are also other tools such as Generative Adversarial Networks, and simpler things such as Noise Reduction or Super Resolution. All of these applications can be built on a deep neural network framework.

What can we expect from the Rotobot Nuke plugin in the near future?

GPU acceleration, conversion from raster-based masks to sparse spline-based curves, and more accurate training should come next.
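A raster-to-spline step could start by reducing a dense mask outline to a few control points. The sketch below assumes the mask boundary has already been traced into an ordered list of pixel coordinates, and uses the Ramer-Douglas-Peucker algorithm, a standard polyline simplification; it is one possible approach, not Kognat’s implementation, and the `outline` data is hypothetical.

```python
import math


def point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:  # a and b coincide: fall back to point distance
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def rdp(points, tolerance):
    """Ramer-Douglas-Peucker: keep only points deviating more than tolerance."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = point_line_distance(points[i], first, last)
        if d > dmax:
            index, dmax = i, d
    if dmax <= tolerance:
        return [first, last]
    # Split at the farthest point and simplify each half recursively.
    left = rdp(points[: index + 1], tolerance)
    right = rdp(points[index:], tolerance)
    return left[:-1] + right  # drop the duplicated split point


# Hypothetical dense outline traced pixel by pixel along two edges of a mask.
outline = [(x, 0) for x in range(0, 11)] + [(10, y) for y in range(1, 11)]
print(rdp(outline, tolerance=0.5))  # [(0, 0), (10, 0), (10, 10)]
```

Twenty-one traced pixels collapse to three corner points; a spline would then be fitted through the surviving points so an artist can adjust a handful of handles instead of repainting pixels.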

Please share the download link for the Rotobot Nuke plugin from Kognat.

The official download link is: https://kognat.com/shop/

A free version with watermarked output is available for all three major 64-bit operating systems: Linux, Mac and Windows.

For all operating systems, with a 12-month subscription, a floating network license is priced at $380 and a node-locked license at $130.

It will be very interesting to see how deep learning and machine learning tools bring a technological revolution to our Animation & Visual Effects industry. For more technical details on deep learning, machine learning, semantic segmentation and AI-based neural networks, download the official PDF by Sam Hodge of Kognat Software, titled ‘Automation of Rough Rotoscopy’.
